AI Red Teaming Has An OPSEC Problem

Don’t buy, invest in, or pay for a course about “AI red teaming” until you read this | Part II | Edition 30

Disclaimer: The techniques described here are for INFORMATIONAL PURPOSES ONLY. Do NOT use these techniques to violate the law. You assume sole responsibility for your research & application of these techniques. Do NOT manipulate AI/ML systems that you have not been granted written permission to test.

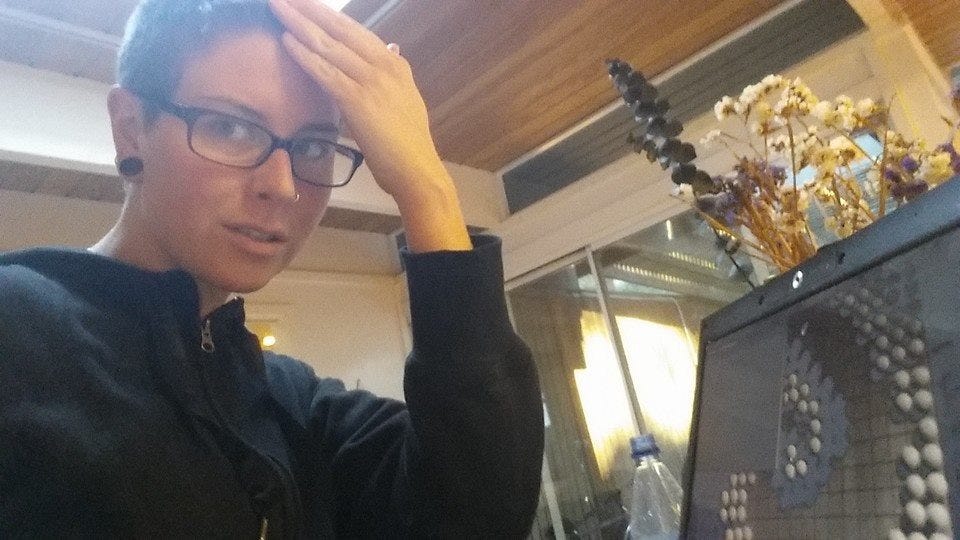

Photo: Me circa 2015, grappling with the realization that the attack playground is infinite, the compute is nearly free, and there’s no one who knows how to defend against me. I was so worried about NATSEC and OPSEC that I waited 7 years just to publish a defense.

Not only is “AI red teaming” mostly security theater, but it actually makes systems more vulnerable.

How?

Because of the game-theoretic constraints introduced by the mathematical realities of the underlying system architectures.

I’ll unpack this in a moment. For now, let’s recap:

In my last post, I promised you that I would talk about how the EchoGram vulnerability disclosure reveals AI red teaming’s serious, intractable OPSEC problems.

And now I’m going to do exactly that.

But first, a reminder: To understand AI security from either an attack or a defense perspective, you need to understand the adversarial subspace problem.

Once you have a conceptual handle on the nearly infinitely large attack spaces each model has–and how they overlap–you are ready to start constructing nation-state level attacks.

Not spraying prompts. The real kind of attacks.

Pay attention to the techniques I’m going to describe here. They’re going to become important when I tell you how to put all this together to really attack AI in a few days.

For now: Let’s go.

Disclosing the Unpatchable

The researchers who published the EchoGram attack used publicly available datasets to construct their attacks.

To many security types, this doesn’t sound like news.

Scanning disclosed vulnerabilities and finding places where patches haven’t been applied is the old-fashioned kind of tradecraft that just about everyone is familiar with.

If you describe AI attacks like EchoGram, you’ll typically hear this refrain: There’s a tension between disclosure and security.

The argument is usually that “responsible disclosure” makes everyone safer–even though it runs the risk of alerting hackers to vulnerabilities that may remain unpatched.

But AI is different.

Disclosing the attacks–like prompts–that were successful against any system does nothing to make anyone safer.

In fact, it makes things considerably worse.

The key to understanding why lies in two important facts:

First, these attacks are not patchable. There is no security fix.

The attacks are nearly infinite. You can never defend against them all. Our work on quantifying transfer attack risk shows the math, and EchoGram demonstrates it in action.

Hardening against one prompt, or even 50,000 prompts, likely leaves a nearly infinite number of variants unpatched.
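To put rough numbers on that claim, here is a back-of-the-envelope sketch in Python. It rests on assumptions chosen purely for illustration, not results from our transfer-attack work: a "variant" is just a hardened prompt with a short suffix of 1 to `max_len` printable ASCII characters appended (the kind of small perturbation EchoGram relies on), and the defense blocks only exact matches of the prompts it was hardened against. The helper names `variants_per_prompt` and `unpatched_variants` are hypothetical.

```python
# Back-of-the-envelope sketch. Assumptions (illustrative, not measured):
#   * a "variant" is a hardened prompt with 1..max_len printable ASCII chars appended
#   * the defense blocks only exact matches of the prompts it was hardened against

PRINTABLE_ASCII = 0x7E - 0x20 + 1  # 95 characters: space (0x20) through tilde (0x7E)


def variants_per_prompt(alphabet_size: int, max_len: int) -> int:
    """Count the original prompt plus every suffix of length 1..max_len."""
    return 1 + sum(alphabet_size ** k for k in range(1, max_len + 1))


def unpatched_variants(hardened: int, alphabet_size: int, max_len: int) -> int:
    """Suffixed variants of the hardened prompts that an exact-match defense never blocks."""
    return hardened * (variants_per_prompt(alphabet_size, max_len) - 1)


if __name__ == "__main__":
    # 50,000 hardened prompts, as in the paragraph above; suffix lengths are arbitrary.
    for max_len in (1, 3, 10):
        per_prompt = variants_per_prompt(PRINTABLE_ASCII, max_len)
        missed = unpatched_variants(50_000, PRINTABLE_ASCII, max_len)
        print(f"suffix <= {max_len:2d} chars: {per_prompt:,} strings per prompt, "
              f"{missed:,} unpatched variants")
```

Under these assumptions, a suffix of at most three characters already yields 866,496 strings per prompt (the original plus 866,495 suffixed versions), so hardening 50,000 prompts leaves roughly 4.3 × 10^10 suffixed variants untouched, and every extra character multiplies the count by 95. Real attacks are not limited to appended suffixes, so read this as an illustration of scale, not a measurement.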

This might still sound like a worthwhile endeavor–isn’t fixing some vulnerabilities better than fixing none?

The answer is a resounding no. It’s not.

And the reason for that comes down to our second consideration:

The game theory of adversarial AI.

An Infinity of Attacks, Cut Down To Size

In an infinite search space, where do attackers start?

This is more than a theoretical question. It has very real time & computational constraints attached to it.

The nearly infinite adversarial search spaces work against attackers, too–unless they know how to flip the constraints of the game into an advantage.

To cut through a high-dimensional, near-infinitely-large space, you need two things: A starting point, and a plan.

In a perfect world, as an attacker you’d want to already know what’s off limits–what’s already defended. In the real world, this kind of intel rarely lands in your inbox. At least that’s true for traditional security.

In AI security, it’s just a Google search away.

Remember how the EchoGram researchers found public datasets and analyzed them to construct their attacks?

As an attacker, you don’t have to do nearly that much work.

This is because prominent “AI red teams” put libraries of prompts right out on the open internet. Proudly. For anyone to use.

How do you, a hypothetical attacker, make use of this?

You pick one prompt and iterate.

That’s it.

EchoGram demonstrated it: Adding a three-character suffix changes a defended prompt into a new attack.

And our paper shows that these attacks will never stop popping up.

The prompt gives you a start. Math gives you the plan.

Infinity is now manageable, and time is on your side.

That’s game theory, baby.

That “defended” prompt just became the key that lets an attacker into the entire system.

And now I’m going to tell you how to do it.

No Such Thing As A Closed Box In AI

These techniques are for informational purposes only. These actions may be ILLEGAL in your location. The information presented here does NOT constitute advice. DO NOT do these steps.

Here are the steps, which, and I cannot stress this enough, you should absolutely not do:

[1] Google your favorite celebrity “AI red team” and find their LinkedIn(s) and business site

This will give you a list of their clients. If that doesn’t work, check their site for logos. If that doesn’t work, go over their interviews, their YouTube, their TikTok, etc.

Anywhere they will most likely be bragging. Because they will, at some point, reveal their clients. And then it’s open season.

[2] Now find their repo of prompts on GitHub or wherever they keep it

Pick a prompt–any prompt–and iterate. Do so programmatically, until you have created between 1 and 10,000 new valid attacks. Repeat for as many prompts as you feel you want to spend the time/compute on.

Maybe make a million new attacks for fun, because why not? Now repeat, because you can do this forever, and the compute is almost free.

[3] Attack.

It is that devastatingly simple.

As an attacker, I would never create a natural language prompt to attack AI.

I, as an attacker, would simply iterate off of one of these prompts in the nearly infinite attack space.

I can iterate only slightly from one or more of your prompts by:

- Vectorizing them

- Finding any combination of symbols which carries a similar vectorization

- Perturbing that *slightly*

- It will look like nonsense to a human

- It will look like an attack to a machine

- Repeat

You, a celebrity “AI red teamer,” did all my work for me.

Thanks!

By programmatically following these steps, I’ve just manufactured an entire suite of attacks which I know are undefended.

Because you told me.

Ethics Before Ego

Do the “AI red teams” do this because they don’t understand what they’re doing?

Or is it because they just don’t care?

I am not a mind reader, so I won’t pretend to know.

Here are some things that I do know.

In 2022, after spending ten years attacking AI and seeing no changes, I published a paper that remains the state of the art (SOTA) for defending agentic and other AI systems against attacks.

I took a lot of flak for it, because defense in security isn’t sexy, and nobody in 2022 seemed to understand the security problems of AI.

Why did I publish a paper on securing AI instead of showboating attacks?

Because that’s what needed to be done.

As a security professional, I feel that it’s important to understand the technical systems I claim to test.

And as a security professional, I feel it’s a matter of morals/ethics/whatever you want to call it to put the client’s OPSEC before my need for ego validation.

If you maintain a public repo of prompts you use to test, you are endangering every client you ever had.

Full stop.

Even worse: Since human creativity is limited, there’s no way all these “AI red teams” are creating unique prompts. There is absolutely going to be overlap.

And there’s no way they are keeping meaningful separation between a public and private list. It can’t be done. The game theory doesn’t support it, and the math doesn’t work out.

Worse still: There is no going back, because the internet is forever, and the prompts that were published still represent unpatchable vulnerabilities.

You can’t unring that bell.

We can never go back.

GG everybody.

Every “AI red teamer” and their repo of prompts just became the industry’s worst AI security vector.

And this is why AI red teaming has an OPSEC problem.

If you paid for an AI red team to assess your AI security, they are likely your biggest AI security liability.

Stay frosty.

The Threat Model

Public libraries of prompts provide an infinite well of novel attacks.

Public libraries of prompts tell attackers exactly what’s undefended, a rarity in traditional offensive security but a regular occurrence in AISEC.

Maintaining a public repo of prompts endangers clients, and makes AI red teamers into the enterprise’s biggest AI security liability.

There is no going back–unpublishing the prompts will not help because the internet is forever, and the vulnerabilities they represent remain unpatchable. There is no undoing the damage caused.


Executive Analysis, Research, & Talking Points

Attacking AI Agents With OPSEC Fails

How can you use the techniques I’ve described to attack AI Agents? Start with the where:

Attack at every level of the Agentic stack.

Here’s how:


Subscribe to Angles of Attack: The AI Security Intelligence Brief to keep reading this post and get 7 days of free access to the full post archives.