MIT Says Your AI Project Is Probably Going To Fail

A new report from Forbes & MIT shows that almost all AI projects fail. With 95% failure rates and billions invested, what separates those who get ROI from the ones who lose big?

Let’s cut to the chase: Your AI project probably has a 5% chance of success.

Forbes is reporting MIT research showing that 95% of AI projects never make it into production.

Let that sink in.

This isn’t just my opinion, nor is it coming from a bunch of Luddites. This is MIT.

In fact, this isn’t an opinion at all–it’s a matter of engineering.

But why? Weren’t we all supposed to be 10xing ourselves into vibe-coded billions?

I’ve written before about how the AI attack surface is massive and how AI red teaming can never find, much less fix, all of GenAI & Agentic AI’s woes.

We’ve also looked at how AI security introduces new ways to attack that traditional security never had to consider: data as an attack vector, mathematically intractable (and thus unpatchable) foundational security flaws, and the ever-expanding, architecturally-dependent attack surface that Agentic AI presents.

But one thing that I can’t say often enough: The systems that secure AI are the same systems required to get it into production.

MIT & Forbes are now saying what I’ve been saying for years: The engineering principles that secure and ensure the operation of mission-critical software can and should be applied to production AI. That is, if you want it to work.

But that’s not the narrative that most people were sold.

Reality Vs The Hype: Who’s Right?

It’s easy to say that the promises of GenAI haven’t lived up to the hype. If you visit SOCMED like LinkedIn, you’ll find a number of these takes.

And they’re not wrong–exactly.

But there’s more nuance.

This is why their stereotyped opponents–the AI boosters, if you will–are quick to point out the dismissive nature of this argument. AI does things, they’re insisting, and if we can just figure out how, then AI can do things for us.

And to be quite honest, there’s truth in that too–but again, with nuance.

Because what was sold to most people wasn’t a nuanced technology that needed careful engineering and consideration to even get it into prod.

What was sold to countless business, military, government, and other leaders was a promise of anything from easy human augmentation to outright workforce replacement–none of which has materialized.

And this is for a reason.

What many, if not most, leaders who are now facing these losses failed to recognize is a difficult truth that every generation seems to have to learn the hard way:

There’s no free lunch.

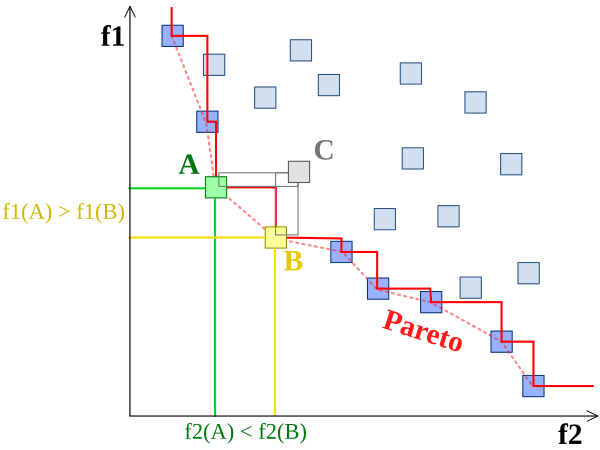

(Ironically, any AI engineer with a passing familiarity of Pareto/Multi-Objective Optimization already knew this. But I digress)

Nothing is for free. Not to get all woo, but this is a principle of the universe.

A Pareto frontier. Source: Wikipedia
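For anyone who hasn’t run into a Pareto frontier before, here is a minimal sketch of the idea in Python. The two objectives (cost and error, lower is better on both) and the five candidate numbers are made up for illustration; nothing here comes from the MIT report. The function keeps only the options that no other option beats on both axes at once.

```python
from typing import List, Tuple

# Each candidate is (cost, error). Lower is better on both axes.
Point = Tuple[float, float]


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the points that no other point dominates."""
    front = []
    for p in points:
        # q dominates p if q is at least as good on both axes
        # and is not the same point.
        dominated = any(
            q[0] <= p[0] and q[1] <= p[1] and q != p
            for q in points
        )
        if not dominated:
            front.append(p)
    return sorted(front)


# Hypothetical (cost, error) pairs for five candidate systems.
candidates = [(1.0, 9.0), (2.0, 5.0), (3.0, 6.0), (4.0, 2.0), (5.0, 2.5)]
print(pareto_front(candidates))
```

Every point left on the front trades one objective against the other: to get a lower error you pay a higher cost. That is the "no free lunch" principle in about twenty lines.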

Invest In Your People And Ops Now, Or Pay Later

So how does this apply to AI?

Let me be blunt: Any ROI you can expect from your AI systems will be net of the initial investment in infrastructure. This includes talent.

Put another way, you are not going to get money out of AI before you put serious money in. This includes investing in the expertise to build MLOps/LLMOps and Agentic Ops.
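If it helps to see the arithmetic, here is a rough sketch. The function names and every dollar figure below are hypothetical, chosen only to show how the return is counted against infrastructure and talent spend; they are not numbers from the report.

```python
def net_return(gross_value: float, infrastructure: float, talent: float) -> float:
    """Value produced minus what was invested up front."""
    return gross_value - (infrastructure + talent)


def roi(gross_value: float, infrastructure: float, talent: float) -> float:
    """Net return as a fraction of the total investment."""
    invested = infrastructure + talent
    if invested <= 0:
        raise ValueError("ROI is undefined without a positive investment")
    return net_return(gross_value, infrastructure, talent) / invested


# Hypothetical figures, for illustration only.
infrastructure = 800_000   # MLOps/LLMOps platform, monitoring, security
talent = 400_000           # the people who build and run it

# Before the system produces anything, the position is simply the spend.
print(net_return(0, infrastructure, talent))

# Only once the value produced exceeds the spend does ROI turn positive.
print(net_return(1_500_000, infrastructure, talent))
print(roi(1_500_000, infrastructure, talent))
```

The point of the sketch is the sign of the first number: the money goes in first, and the system has to earn it back before anything counts as a return.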

This doesn’t happen by magic or vibes. And 95% of organizations are finding out the hard way.

AI is not a joke. Nor is it magic. It’s serious math, and it requires serious engineering to productionize. I’m sorry if someone told you otherwise.

It’s an uncomfortable fact that might hurt some feelings. And I’m fine with that.

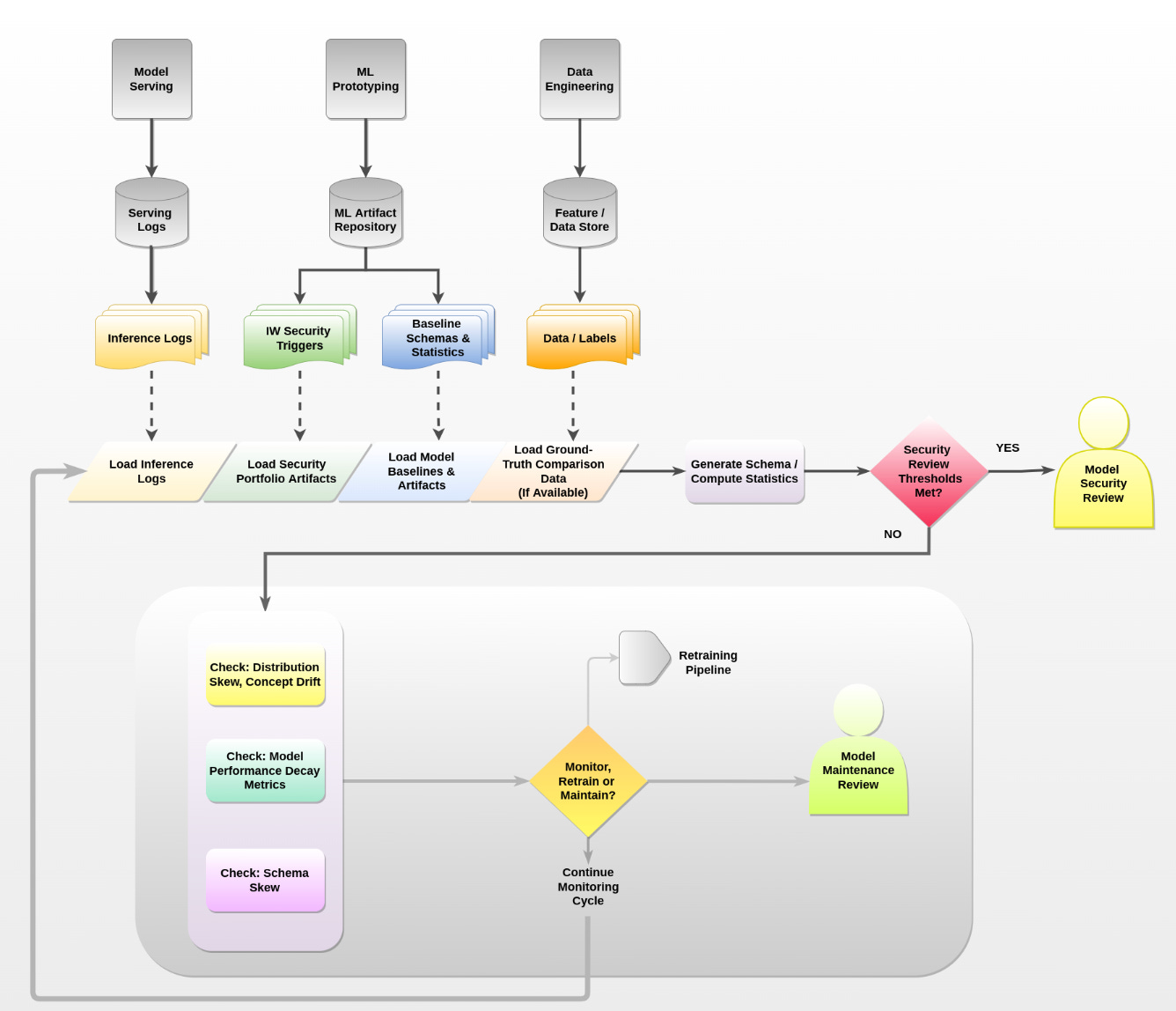

A continuous monitoring pipeline with security deployment, architected by the author. Source.

If the disappointment of finding out AI isn’t a magic box that you put vibes into and extract money from is that painful, consider this an opportunity to reassess whether you have been sold a product. And whether some vulnerability in your value system may have helped enable the con. Some offense.

If I’m coming off harsh, it’s because I respect you too much to lie to you about what this tech can do, and the real requirements to do it.

But if I can help just one business leader realize that investing in AI projects without the engineering infrastructure to support them is worse than taking that money to a casino?

I’ll count it as a win.

Because on a personal note, as a hacker and an engineer: My sense of justice is causing me extreme difficulty empathizing with anyone who would profit off others by misrepresenting a technology they’re selling.

Especially when that technology has the potential to be used in mission-critical applications, or in ways that cause harm. Those are exactly the places where every AI vendor suggests we should deploy their products.

Stop Accepting Condescension, Start Building

IMO, this all stops when we as technologists stop accepting it, and demand better.

I know that sufficiently advanced technology is supposed to seem like magic or something, but aren’t we all getting tired of being condescended to like this? I cannot be the only one.

If a vendor’s tech is too complex to explain, THEY are the ones who don’t understand it.

If you can’t explain it to a five-year-old, that means your understanding is faulty. And CISOs aren’t five-year-olds.

We’re not being gaslit by “genius” anymore.

Make it make sense. Literally.

The Threat Model

AI is not a magic closed box full of money–it requires serious engineering to get into production, and get ROI.

The capabilities that productionize AI, like monitoring, auditing, threat modeling, and supply chain management, are also the ones that secure AI and help make it more responsible–ignoring one of these factors introduces complications for all the others.

The real risk to successful and secure AI deployments may be the hypesters who sold easy fixes, without honestly educating the enterprise on what deploying AI actually requires.

Resources To Go Deeper

Wazir, Samar, Gautam Siddharth Kashyap and Parag Saxena. “MLOps: A Review.” (2023).

Díaz-de-Arcaya, Josu, Juan López-De-Armentia, Raúl Miñón, Iker Lasa Ojanguren and Ana I. Torre-Bastida. “Large Language Model Operations (LLMOps): Definition, Challenges, and Lifecycle Management.” 2024 9th International Conference on Smart and Sustainable Technologies (SpliTech) (2024): 1-4.

Pahune, Saurabh and Zahid Akhtar. “Transitioning from MLOps to LLMOps: Navigating the Unique Challenges of Large Language Models.” Information (2025): n. pag.

Executive Analysis, Research, & Talking Points

Why AI Projects Fail, & One Quick Tip To Get Them On Track

Back to the tech: Why are these projects failing? And what lessons can we apply to our Agentic and AI systems?
