OpenAI Just Dropped Their Agentic Application: Three Ways The Attack Surface Changed, and Three Steps Leaders Can Take Now

OpenAI dropped their new Agentic AI product–and drastically changed their attack surface. Here’s how. | Edition 10

OpenAI’s newest product, ChatGPT agent, is allegedly truly Agentic. The Agent’s value is banked on assertions that it’s able to “fluidly [shift] between reasoning and action” to accomplish real-world tasks–including financial transactions–with accuracy.

Zero benchmarks are presented to validate this premise. But I’ll get to that later.

According to the official release, “ChatGPT now thinks and acts, proactively choosing from a toolbox of agentic skills” to “do work for you” by “handling complex tasks from start to finish”.

This precisely replicates the patterns found in Cloud Security Alliance’s Red Teaming Guide advice on Why Agentic AI Is Different. According to OpenAI, their agents:

Combine planning, reasoning, and acting

Operate across time, systems, and roles

Introduce emergent behavior– which, in turn, introduces expanded attack surfaces

Let’s break these down.

OpenAI Agents Combine Planning, Reasoning, And Acting

OpenAI says their agents can complete multi-step tasks, like searching for and booking a plane ticket, and maybe even buying an outfit for the trip.

While each of these may sound like a discreet–if tedious–task to humans used to completing the many steps involved often unconsciously, the reality is different for a machine.

A software program with no contextual understanding of the world requires incredibly precise instructions to understand its operating parameters. That’s why code exists: it’s a precise & repeatable way of specifying design instructions to a machine.

But Agentic systems, due to the LLMs that power them, do not run on code–they interpret natural language instructions.

Which means these systems have neither real world experience, nor any means of even interpreting something as precise as code.

They interpret natural language, plan in natural language, and take actions based on interpretations of–you guessed it–imprecise natural language, now additionally run through several interpretive filters. Filters of indeterminate quality, because due to the non-deterministic nature of the models, the instructions themselves will change with the repetition every instance of every task.

For mission-critical applications which require determinism in their outputs, this is the nightmare scenario: none of the contextual information or moral responsibility of a human, and none of the precision of a machine.

From an engineering perspective, it’s the worst of all worlds. Which, as an engineer, is never the optimization you want to be making.

Engineering always involves tradeoffs. Creatively and analytically solving problems within these constraints is, in my humble opinion, a major aspect of what makes engineering a fascinating, challenging, and honestly, fun career.

But what, exactly, is the problem being solved by an AI Agent that can maybe just buy you an outfit, or maybe make questionable financial or even legal decisions on your behalf, that you’re then responsible for? And what are the tradeoffs being silently made?

The answer lies in counting the steps.

Not physical steps: I’m talking about breaking each task down into the most granular phases in the task-completion process.

Because it’s in these steps where Agentic security failures happen–and multiply.

OpenAI Agents Operate Across Time, Systems, And Roles

The many steps of even these seemingly simple tasks add up quickly when we start to break down all the instructions that would be needed to explain these processes to a entity without context.

How many steps are really involved when you book a plane ticket? What’s the dimensionality of the problem? And what happens when we try to compress it into natural language?

If you’re trying to find a specific seat, route, or price point, you’ve already introduced multivariate complexity into the task. Even a date/time, plus location, plus one more variable (like seat location or price) convolutes the comparison.

Just adding even one more variable (route, layovers, etc) can make this one comparison aspect of this “single task” feel intractable. Which is probably why you might want assistance with it in the first place.

Still, our brains handle these comparisons as more or less routine–we’re just burning ATP made from glucose. For the machine, it’s all tokens.

Back to the challenge at hand. This reasoning task is not the only one in the queue.

Planning and execution for next steps must also take place–each with their own distinct, multistep process. Choosing the plane ticket was just one step.

Now our Agent has to navigate to the purchase page and ensure the correct parameters are set. This is actually many steps, including checking multiple input fields for accuracy, the logic of which will be communicated via natural language as both data and instructions within the same channel. Yikes.

The potential for mistakes multiplies.

Assuming all that happens correctly, now the system must enter purchase information (credit cards, any identification verification required), which introduces more vectors for theft and fraud.

What happens when your AI Agent gets tricked into entering your personal data into a site that’s slopsquatting something hallucinated into the underlying model’s reality?

What happens when an AI Agent makes an unexpected–and legally binding–decision on your behalf?

Who bears responsibility for actions taken by Non Human Identities? Does any AI Agent you deploy carry the implication of your consent? What will be the first emergent behavior to challenge this? What impacts will it have?

These are basic engineering design questions that really just scratch the surface. And OpenAI has demonstrated answers to none of them. Even they admit they don’t know.

Just release, and hope for the best.

OpenAI Agents Will Introduce Emergent Behavior

Because of the nearly infinite ways to trick or confuse these models, guardrails will always be insufficient to prevent emergent behaviors.

What might these behaviors look like? Impossible to say, because (again) the potential threat vectors are nearly limitless.

What we can do to model potential threats is look at the Agency granted to Agentic systems–and the risk vectors for the task areas associated with the relevant phase of completion.

For example, when an Agent is tasked with entering a credit card number into a payment field, what can go wrong? Are we risking a fraudulent transaction, a stolen credit card–or something worse?

What happens when we give Agents more autonomy?

The question is not if these Agents will demonstrate emergent behaviors–it’s how serious the consequences will be, and how prepared we’ll be to prevent or mitigate them.

Benchmarks Designed To Signal “Smart”, Not Secure

Bizarrely, other than an awkward acknowledgement that prompt injection remains intractable, OpenAI chose to mostly ignore these issues in their release, focusing instead on arcane academic benchmarks seemingly unrelated to any real-world Agentic task.

For example:

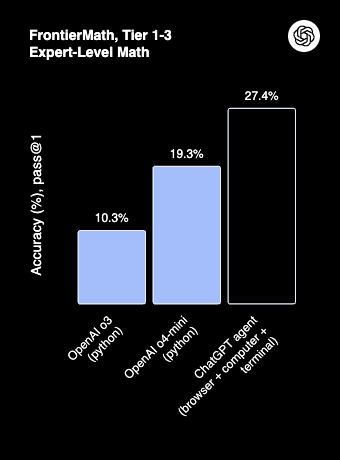

“FrontierMath** is the hardest known math benchmark, featuring novel, unpublished problems that often take expert mathematicians hours or even days to solve. With tool use, like access to a terminal for code execution, ChatGPT agent reaches 27.4% accuracy…”

Ok?

OpenAI’s security marketing slogan, apparently: Great news everybody, this model scored 27% on a math test–so go ahead and give it all your financial and personal information!

OpenAI’s Agentic system took a math test, which is cool I guess? Source: OpenAI

Keep in mind, these are Agentic systems with real-world consequences. Security and privacy are on the line in ways they never have been before.

So I don’t want to see evidence of marginal improvements in some abstract, contrived tests for mathematicians–I want to assurance that actions are logged, that privacy is deterministically protected, and that security of a system where data and instructions are delivered through a single channel by design has been addressed.

Spoiler: It has not. Unless we’re all missing something here, online math tests do not somehow correlate to security and privacy preservation.

And that is likely a big part of why OpenAI has chosen to focus on weird abstractions for “smart” in the public perception, versus real engineering specs for what should be mission-critical level design.

The Threat Model

AI Agents combining Non-Human Identities with non-deterministic behavior will make financially and/or legally binding decisions non-deterministically.

Multi-step Agentic processes introduce the potential for amplifying cascading failures across component systems.

Agents’ emergent behaviors in real-world interactions will create unforeseen real-world consequences.

Resources to Go Deeper

Deng, Zehang, Yongjian Guo, Changzhou Han, Wanlun Ma, Junwu Xiong, Sheng Wen and Yang Xiang. “AI Agents Under Threat: A Survey of Key Security Challenges and Future Pathways.” ACM Computing Surveys 57 (2024): 1 - 36.

Wu, Fangzhou, Ning Zhang, Somesh Jha, Patrick Drew McDaniel and Chaowei Xiao. “A New Era in LLM Security: Exploring Security Concerns in Real-World LLM-based Systems.” ArXiv abs/2402.18649 (2024): n. pag.

Executive Analysis, Research, & Talking Points

Three Keys To Mastering The Agentic Threat Landscape

The Agentic threat landscape is large, novel and rapidly expanding. But there are proactive steps leaders can take right now to respond. Here are three strategies to start proactively managing your threat surface:

Keep reading with a 7-day free trial

Subscribe to Angles of Attack: The AI Security Intelligence Brief to keep reading this post and get 7 days of free access to the full post archives.