We Need To Talk About Real Engineering

AI applications are now mission-critical. So why isn’t the engineering that powers them? | Edition 24

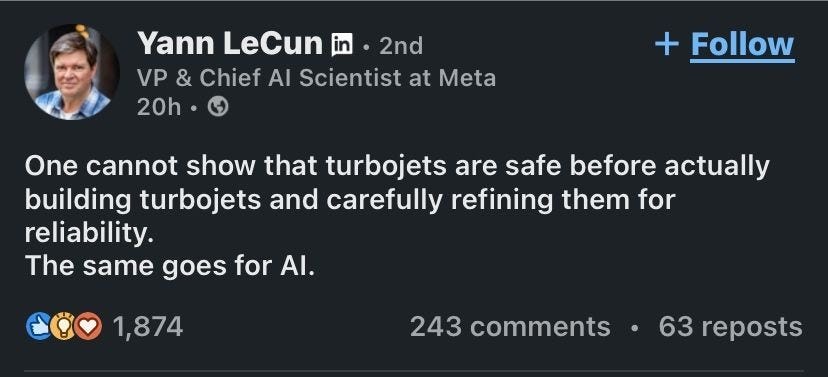

It all started with a bizarre comment from Meta’s Yann LeCun, attempting (for some reason) to analogize AI development to aerospace engineering.

Maybe it was to grant the sheen of “real engineering” to the ad-hoc, often disastrous AI development & release process at Meta. I’m not a mind reader, but this seems to be the most likely explanation to me.

In it, LeCun glossed over a few important facts:

The mathematics and physics of jet engines are well understood long before they’re built.

The physics that govern jets are tested via models and digital twins long before hundreds of millions of dollars are invested in prototyping.

You don’t just strap any jets onto any airframe and hope for the best; engines are developed in consideration of the airframe/s they’re intended for.

When you’re testing a jet for real-world safety, you don’t put human lives on the line.

All of these assumptions break when it comes to AI development.

This is not even to mention the lack of engineering rigor that goes into AI prototyping itself. I’m effectively just talking about release processes here.

And it gets even worse, because all of this erases the very real and very serious field of safety-critical software engineering.

Meaning: the lack of engineering rigor in AI is real, and runs deep.

This is of course reflected in the fact that a high-ranking “scientist” at a (supposedly) major AI industry player seemed not only to ignore the discipline of safety-critical software engineering entirely, but also to think it was fine to invoke aerospace engineering itself as an analogy to Meta’s infamous move-fast-and-break-things ethos.

Sir, you’ve got to be kidding.

We’ve witnessed some of the most famous names in this space reveal their lack of engineering chops on the global stage more than once. So this is not a surprise.

But to drag aerospace engineering into this shoddy, immoral, lazy, arrogant argument without doing even the most cursory research? This goes beyond embarrassing–it’s dangerous.

I don’t care what mental gymnastics you’re willing to do on LeCun’s behalf.

I’m not going to sit back and allow the field I love to be held back, or more people to be harmed, by this kind of ignorance any further.

Software Engineering Isn’t Real Engineering Yet–But It Should Be

The classic engineering disciplines have sneered at computer scientists & coders calling themselves engineers for quite some time.

Do they have a point? Depends on the industry.

Software engineering for aerospace consists of conservatively developed, well-defined, and rigorously applied principles and practices.

It’s why commercial passenger aircraft can be operated transglobally by a two-person crew. It’s why planes don’t usually fall out of the sky.

And when it fails–as was the case with the Design Assurance Level assignment failures in the Boeing 737 Max–catastrophic loss of human life can occur.

These losses come with financial and reputational costs, with potentially massive scale.

But no one has more of an interest in air safety than the people who operate these aircraft daily.

That’s why it matters that the Air Line Pilots Association, International (ALPA) has publicly come out in opposition to Boeing’s recent requests for regulatory exemptions for software components similar to those whose failure brought down two 737 Max aircraft full of passengers.

When the pilots’ union starts making statements about software, you should pay attention.

Yann LeCun apparently did not.

Meanwhile, In Menlo Park

Software engineering for aerospace is rigorous and treated as a mission-critical component.

Software “engineering” in Silicon Valley is hackathons filled with 20-somethings desperate to be the next Big Genius before they become over the hill at 30 (eyerolls warranted), while high on AI copium and whatever fad is the “new” coffee/alcohol/weed/microdosing.

If you’ve spent more than 15 minutes in the city or the peninsula, you know I’m right. And you either love it or hate it. But let’s call a thing what it is.

Don’t get me wrong, the tech culture in San Francisco et al is brutal and exhilarating. You’re playing with the big kids there, and the level of social gamesmanship just has to be higher.

If you want to learn how to play the startup game, there’s really no other place to be. Don’t @ me, New York.

But we are not going to pretend that this place or its culture is rigorous, serious, or conservative.

Let’s be real. San Francisco is the Ivy League of startup cities–you go there to network, not learn things.

I said what I said.

My most charitable guess is that these people have been told they’re geniuses for so long that they started to believe it–and thought the rules of the science they claim to admire so much don’t apply to them.

That’s less dark than believing that they understand full well that the products they make now carry societal and personal human consequences in their failure modes–and they just don’t care.

Yann Fails At Scale

I’m disinclined to be charitable in my interpretation of a man whose entire claim to authority is that he’s a genius researcher.

I’m not going to do the work of filling in what he “must have meant” for him. I have more self-esteem than to assume a man is smarter than me because of a title, and I recommend others adopt this mindset as well when dealing with AI researchers.

And even under the best of interpretations, this comment, presented without context, implies that the post-design testing of jets is analogous to how AI systems are tested. This is simply–and devastatingly–not true.

We don’t strap a couple of jet engines onto any random airframe, load it up with passengers and crew, and then shoot it into the sky over a major city just to see what happens.

But that’s exactly what Meta does with its AI.

Actually, I take that back. A passenger jet typically holds, at most, a few hundred people.

Per Meta’s Second Quarter 2025 Results Report, their products are used by more than 3.4 billion people daily, a 6% year-over-year increase.

3.4 billion. And growing.

So it’s far worse than a bad analogy: It’s an utter misunderstanding of the mathematics of scale.
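To put rough numbers on that gap, here is a back-of-the-envelope sketch. The 300-seat jet is my stand-in for “a few hundred” and is an assumption for illustration only; the 3.4 billion figure is the one quoted above.

```python
# Rough scale comparison: how many full passenger jets would it take
# to carry Meta's reported daily user base?
SEATS_PER_JET = 300            # ASSUMPTION: stand-in for "a few hundred"
DAILY_USERS = 3_400_000_000    # daily-user figure quoted in the post


def jets_needed(users: int, seats: int) -> int:
    """Return the number of jets needed to seat `users` people (rounded up)."""
    if seats <= 0:
        raise ValueError("seats must be positive")
    return -(-users // seats)  # ceiling division using integers only


if __name__ == "__main__":
    print(f"{jets_needed(DAILY_USERS, SEATS_PER_JET):,} jets")
```

Under that assumption, the answer is on the order of eleven million fully loaded jets, every single day.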

And an AI researcher employed at any level at a major company should absolutely know better.

Jet engines are rigorously tested, at every stage of design, with zero passengers involved. AI systems are routinely launched onto billions of users, with testing post hoc if at all.

There is no possibility of analogizing aerospace engineering processes to current state-of-the-art (SOTA) AI development.

But there should be.

The Costs Mount–Pay Now Or Later

What do I see when I look at those numbers?

Innovation? Profit?

No.

I see the impending class action lawsuits.

If I were an attorney, I wouldn’t be using AI to fake cases; I’d be learning every damning fact I could about AI security and these companies’ willful negligence–and opening another bank account to cash in when the lawsuits begin.

Just my opinion.

And if I were a CDO or related role at any of these companies, well…I wouldn’t be. Let’s just say that.

As we witness the lawsuits beginning to roll in for AI products that are being tested at scale, on unsuspecting populations, by people who claim to be the smartest kids in the room while refusing to do even the most basic reading, we have to wonder: When is enough going to be enough?

AI applications are safety-critical now. Their engineering should be, too.

The Threat Model

Testing products by launching them onto users at scale would be considered criminal misconduct in most engineering disciplines; that it isn’t for AI applications demonstrates how regulations can lag behind the SOTA.

There is currently no analogy between the discipline of aerospace engineering and SOTA AI development, but because of the ways AI touches human lives, there should be.

The full societal and organizational costs of this gap are nowhere near visible–yet.

Resources To Go Deeper

Beyer, Michael, Andrey Morozov, Kai Ding, Sheng Ding and Klaus Janschek. “Quantification of the Impact of Random Hardware Faults on Safety-Critical AI Applications: CNN-Based Traffic Sign Recognition Case Study.” 2019 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW) (2019): 118-119.

Kelly, Jessica, Shanza Ali Zafar, Lena Heidemann, João-Vitor Zacchi, Delfina Espinoza and Núria Mata. “Navigating the EU AI Act: A Methodological Approach to Compliance for Safety-critical Products.” 2024 IEEE Conference on Artificial Intelligence (CAI) (2024): 979-984.

Khedher, Mohamed Ibn, Houda Jmila and Mounîm A. El-Yacoubi. “On the Formal Evaluation of the Robustness of Neural Networks and Its Pivotal Relevance for AI-Based Safety-Critical Domains.” International Journal of Network Dynamics and Intelligence (2023): n. pag.

Executive Analysis, Research & Talking Points

Why Saving The Industry Means Killing The Old Expertise

AI research is heading for a cliff, and everyone who knows what’s up, knows it.

In my view, this is a generational divide: Between an old guard, expecting to be revered as rare, esoteric geniuses playing G-d; and a new generation living in the reality of these systems’ applications in society.

And these two viewpoints are fundamentally incompatible. One has to go.
